Ensemble learning can help you make better decisions and solve many real-life challenges by combining decisions from multiple models.

Machine learning (ML) continues to spread its wings across sectors and industries, whether it’s finance, medicine, app development, or security.

Training ML models properly will help you achieve greater success in your business or job role, and there are various methods to achieve that.

In this article, I’ll discuss ensemble learning, its importance, use cases, and techniques.

Stay tuned!

What Is Ensemble Learning?

In machine learning and statistics, “ensemble” refers to methods that generate various hypotheses using a common base learner.

Ensemble learning is a machine learning approach in which multiple models (like experts or classifiers) are strategically created and combined to solve a computational problem or make better predictions.

This approach seeks to improve the prediction, function approximation, classification, etc., performance of a given model. It’s also used to reduce the chance of choosing a poor or less useful model out of many. To achieve improved predictive performance, multiple learning algorithms are used.

Ensemble Learning in ML

In machine learning models, sources such as bias, variance, and noise can cause errors. Ensemble learning can help reduce these error-causing sources and improve the stability and accuracy of your ML algorithms.

Here’s why ensemble learning is used in various scenarios:

Choosing the Right Classifier

Ensemble learning helps you choose a better model or classifier while reducing the risk that results from poor model selection.

Different types of classifiers are used for different problems, such as support vector machines (SVM), multilayer perceptrons (MLP), naive Bayes classifiers, decision trees, etc. In addition, there are different realizations of each classification algorithm to choose from. Performance can also differ from one training data set to another.

But instead of selecting just one model, if you use an ensemble of all these models and combine their individual outputs, you may avoid selecting a poorer model.

Data Volume

Many ML methods and models are not that effective when you feed them too little data or a very large volume of data.

On the other hand, ensemble learning can work in both scenarios, whether the data volume is too small or too large.

- If there’s inadequate data, you can use bootstrapping to train various classifiers with the help of different bootstrap data samples.

- If there’s a large data volume that makes training a single classifier challenging, you can strategically partition the data into smaller subsets.

Complexity

A single classifier might not be able to solve some highly complex problems. The decision boundaries separating data of various classes might be highly complex. So, if you apply a linear classifier to a non-linear, complex boundary, it won’t be able to learn it.

However, by properly combining an ensemble of suitable linear classifiers, you can make it learn a given nonlinear boundary. The data is divided into many smaller, easy-to-learn partitions, and each classifier learns just one simpler partition. Next, the different classifiers are combined to produce an approximate decision boundary.

Confidence Estimation

In ensemble learning, a vote of confidence is assigned to a decision that a system has made. Suppose you have an ensemble of various classifiers trained on a given problem. If the majority of classifiers agree with the decision made, the outcome can be regarded as a high-confidence decision of the ensemble.

On the other hand, if half of the classifiers don’t agree with the decision made, it’s said to be a low-confidence decision.

However, a high- or low-confidence decision is not always the correct one. But there’s a high chance that a decision with high confidence will be correct if the ensemble is properly trained.
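To make the idea concrete, here’s a minimal Python sketch that treats the share of classifiers agreeing with the winning label as the confidence. The function name `vote_with_confidence` and the spam/ham labels are my own illustrative assumptions, not part of any library.

```python
from collections import Counter

def vote_with_confidence(votes):
    """Return the winning label and the fraction of classifiers that voted for it."""
    label, count = Counter(votes).most_common(1)[0]
    return label, count / len(votes)

# Hypothetical votes from ten classifiers on one sample.
print(vote_with_confidence(["spam"] * 9 + ["ham"]))      # ('spam', 0.9)
print(vote_with_confidence(["spam"] * 5 + ["ham"] * 5))  # ('spam', 0.5)
```

A value close to 1.0 corresponds to a high-confidence decision, while a value around 0.5 signals that the ensemble is split. On a tie, `Counter.most_common` returns the label it saw first.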

Accuracy with Data Fusion

Data collected from multiple sources, when combined strategically, can improve the accuracy of classification decisions. The resulting accuracy can be higher than what a single data source achieves.

How Does Ensemble Learning Work?

Ensemble learning takes multiple mapping functions that different classifiers have learned and then combines them to create a single mapping function.

Here’s an example of how ensemble learning works.

Example: You’re creating a food-based application for end users. To offer a high-quality user experience, you want to collect their feedback on the problems they face, prominent loopholes, errors, bugs, etc.

For this, you can ask the opinions of your family, friends, co-workers, and other people you communicate with frequently about their food choices and their experience of ordering food online. You can also release your application in beta to collect real-time feedback with less bias and noise.

So, what you’re actually doing here is considering multiple ideas and opinions from different people to help improve the user experience.

Ensemble learning and its models work in a similar way. It uses a set of models and combines them to produce a final output to improve prediction accuracy and performance.

Basic Ensemble Learning Techniques

#1. Mode

A “mode” is the value that appears most often in a dataset. In ensemble learning, ML professionals use multiple models to create predictions about each data point. These predictions are considered individual votes, and the prediction that most models have made is considered the final prediction. It’s mostly used in classification problems.

Example: If four people rated your application 4 while one of them rated it 3, then the mode would be 4 since the majority voted 4.
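Here’s a small Python sketch of the same idea, using only the standard library. The helper name `majority_vote` is my own choice for illustration.

```python
from collections import Counter

def majority_vote(predictions):
    """Return the most common prediction (the mode) among the models' votes."""
    # most_common(1) gives [(label, count)]; on a tie the first-seen label wins.
    label, _count = Counter(predictions).most_common(1)[0]
    return label

ratings = [4, 4, 4, 4, 3]
print(majority_vote(ratings))  # 4
```

The same function works for class labels such as strings, since `Counter` only needs hashable values.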

#2. Average/Mean

Using this technique, professionals take into account all the model predictions and calculate their average to come up with the final prediction. It’s mostly used in making predictions for regression problems, calculating probabilities in classification problems, and more.

Example: In the above example, where four people rated your app 4 while one person rated it 3, the average would be (4+4+4+4+3)/5=3.8
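As a rough sketch, averaging can be written in a few lines of Python. I’m assuming here that each model returns one prediction per data point, arranged as one row per model; the name `average_predictions` is hypothetical.

```python
from statistics import mean

def average_predictions(per_model_predictions):
    """Average column-wise: one row per model, one column per data point."""
    return [mean(column) for column in zip(*per_model_predictions)]

# The rating example from the text: five "models", one data point each.
ratings = [[4], [4], [4], [4], [3]]
print(average_predictions(ratings))  # [3.8]
```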

#3. Weighted Average

In this ensemble learning method, professionals allocate different weights to different models for making a prediction. Here, the allocated weight describes each model’s relevance.

Example: Suppose 5 individuals provided feedback on your application. Out of them, 3 are application developers, while 2 have no app development experience. So, the feedback of those 3 people will be given more weight than that of the other 2.
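A minimal sketch of the calculation follows. The ratings and the weights (2 for each developer, 1 for everyone else) are made-up numbers for illustration only; in practice, weights are usually chosen from each model’s validation performance.

```python
def weighted_average(predictions, weights):
    """Combine predictions, letting more relevant models count for more."""
    if len(predictions) != len(weights):
        raise ValueError("need exactly one weight per prediction")
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(p * w for p, w in zip(predictions, weights)) / total_weight

# Hypothetical ratings: the first three come from developers.
ratings = [4, 5, 4, 3, 2]
weights = [2, 2, 2, 1, 1]
print(weighted_average(ratings, weights))  # 3.875
```

With equal weights the same ratings would average 3.6, so the developers’ higher ratings pull the result up.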

Advanced Ensemble Learning Techniques

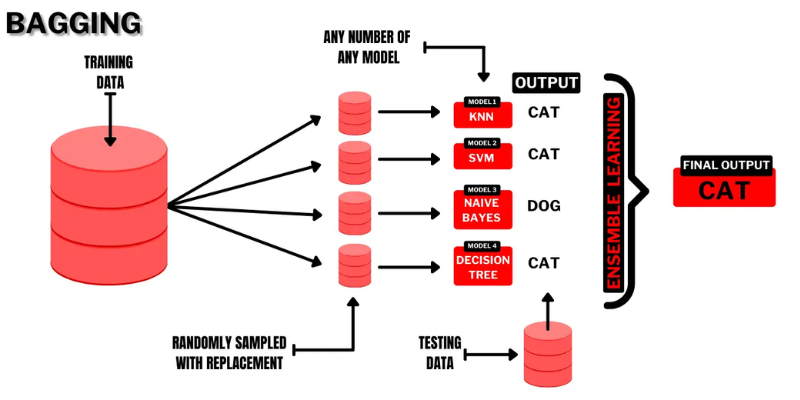

#1. Bagging

Bagging (Bootstrap AGGregatING) is a highly intuitive and simple ensemble learning technique with good performance. As the name suggests, it’s made by combining two words, “Bootstrap” and “aggregation”.

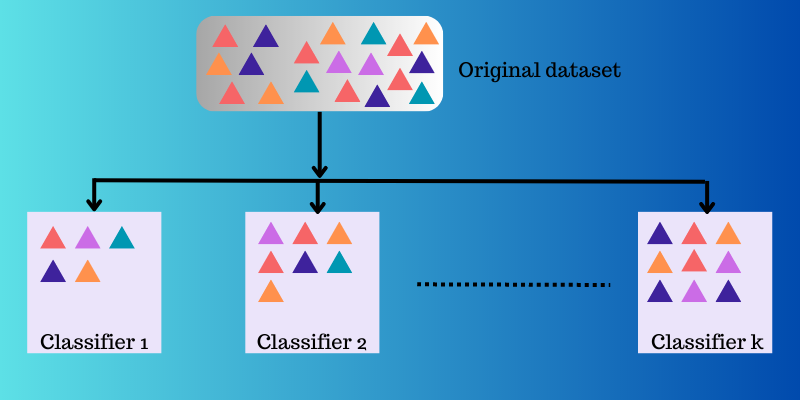

Bootstrapping is a sampling method where you create subsets of observations drawn from an original data set with replacement. Here, the subset size is the same as that of the original data set.

So, in bagging, subsets or bags are used to understand the distribution of the whole set. However, the subsets can be smaller than the original data set in bagging. This method involves a single ML algorithm. The aim of combining different models’ results is to obtain a generalized outcome.

Here’s how bagging works:

- Multiple subsets are generated from the original set, with observations selected with replacement. The subsets are used to train models or decision trees.

- A weak or base model is created for each subset. The models are independent of one another and run in parallel.

- The final prediction is made by combining the predictions from every model using statistics like averaging, voting, etc.
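The steps above can be sketched in plain Python. This is a toy illustration under my own assumptions: one numeric feature, 0/1 labels, and a one-split “decision stump” as the weak model. All function names are hypothetical; a real project would more likely reach for a library implementation.

```python
import random
from collections import Counter

def predict_stump(stump, x):
    """A stump predicts 1 on one side of its threshold and 0 on the other."""
    threshold, polarity = stump
    above = x > threshold
    return int(above) if polarity == 1 else int(not above)

def fit_stump(samples):
    """Try every threshold and orientation; keep the one with the fewest errors."""
    best_errors, best_stump = None, None
    for threshold in sorted({x for x, _ in samples}):
        for polarity in (1, -1):
            stump = (threshold, polarity)
            errors = sum(predict_stump(stump, x) != y for x, y in samples)
            if best_errors is None or errors < best_errors:
                best_errors, best_stump = errors, stump
    return best_stump

def bagging_fit(samples, n_models=25, seed=0):
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    models = []
    for _ in range(n_models):
        # Bootstrap: draw len(samples) observations with replacement.
        bag = [rng.choice(samples) for _ in samples]
        models.append(fit_stump(bag))  # each model is trained independently
    return models

def bagging_predict(models, x):
    """Aggregate by majority vote across all base models."""
    votes = Counter(predict_stump(model, x) for model in models)
    return votes.most_common(1)[0][0]

# Toy data: the label is 1 whenever x is greater than 5.
data = [(x, int(x > 5)) for x in range(11)]
models = bagging_fit(data)
print(bagging_predict(models, 2), bagging_predict(models, 9))
```

Each stump sees a slightly different bag, so individual thresholds vary, but the vote across all 25 stumps smooths those differences out. For regression, you would average the predictions instead of voting.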

Popular algorithms used in this ensemble technique are:

- Random forest

- Bagged decision trees

The advantage of this method is that it helps keep variance errors to a minimum in decision trees.

#2. Stacking

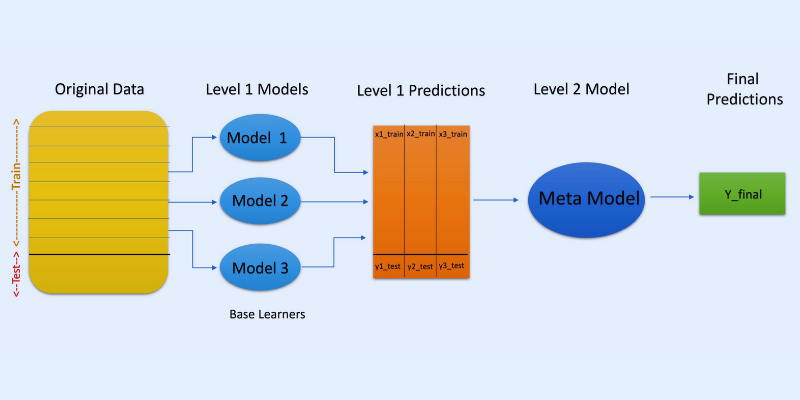

In stacking, or stacked generalization, predictions from different models, like a decision tree, are used to create a new model that makes predictions on the test set.

Stacking involves the creation of bootstrapped subsets of data for training models, similar to bagging. But here, the output of the models is taken as input to another classifier, known as a meta-classifier, for the final prediction of the samples.

The reason two classifier layers are used is to determine whether the training data sets have been learned appropriately. Although the two-layered approach is common, more layers can also be used.

For instance, you can use 3-5 models in the first layer, or level 1, and a single model in the second layer, or level 2. The latter combines the predictions obtained in level 1 to make the final prediction.

Furthermore, you can use any ML model for aggregating predictions; a linear model like linear regression, logistic regression, etc., is common.
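Here’s a deliberately tiny sketch of the two-level idea in Python. To stay dependency-free, I’m assuming two hand-written stand-ins for already-trained level-1 classifiers (`base_a` and `base_b`) and using a simple lookup table as the level-2 meta-classifier instead of the linear model mentioned above. All names and data are hypothetical.

```python
from collections import Counter, defaultdict

# Level 1: stand-ins for two already-trained base classifiers.
def base_a(features):
    return int(features[0] > 0.5)

def base_b(features):
    return int(features[1] > 0.5)

def fit_meta(base_models, holdout):
    """Level 2: for each combination of level-1 outputs, learn the most common true label."""
    table = defaultdict(Counter)
    for features, label in holdout:
        key = tuple(model(features) for model in base_models)
        table[key][label] += 1
    return {key: counts.most_common(1)[0][0] for key, counts in table.items()}

def stacked_predict(base_models, meta_table, features, default=0):
    """Feed the level-1 outputs into the meta-classifier for the final prediction."""
    key = tuple(model(features) for model in base_models)
    return meta_table.get(key, default)  # unseen combinations fall back to default

base_models = [base_a, base_b]
# Hypothetical holdout set with an XOR-like pattern that neither base model captures alone.
holdout = [((0.1, 0.2), 0), ((0.9, 0.1), 1), ((0.2, 0.8), 1), ((0.9, 0.9), 0)]
meta_table = fit_meta(base_models, holdout)
print(stacked_predict(base_models, meta_table, (0.8, 0.3)))  # 1
```

The point of the sketch is the data flow: the meta-classifier never sees the raw features, only what the level-1 models predicted. Fitting it on a holdout set keeps it from simply memorizing the base models’ training errors.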

Popular ML algorithms used in stacking are:

- Blending

- Super ensemble

- Stacked models

Note: Blending uses a validation or holdout set from the training dataset for making predictions. Unlike stacking, blending involves predictions made only on the holdout set.

#3. Boosting

Boosting is an iterative ensemble learning method that adjusts a particular observation’s weight depending on its last or previous classification. This means every subsequent model aims at correcting the errors found in the previous model.

If the observation is not classified correctly, then boosting increases the weight of the observation.

In boosting, professionals train the first algorithm on the entire dataset. Next, they build the subsequent ML algorithms using the residuals extracted from the previous boosting algorithm. Thus, more weight is given to the observations that the previous model predicted incorrectly.

Here’s how it works step by step:

- A subset is generated from the original data set. Every data point has the same weight initially.

- A base model is created on the subset.

- The prediction is made on the whole dataset.

- Using the actual and predicted values, errors are calculated.

- Incorrectly predicted observations are given more weight.

- A new model is created and predictions are made on this data set, while the model tries to correct the previously made errors. Multiple models are created in a similar way, each correcting the previous errors.

- The final prediction is made by the final model, which is the weighted mean of all the models.
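As a sketch of the reweighting loop, here’s a small AdaBoost-style example in plain Python. It rests on my own simplifying assumptions: one numeric feature, labels of -1 and +1, decision stumps as the weak models, and training on the full toy set instead of a subset. The function names are hypothetical.

```python
import math

def stump_predict(stump, x):
    threshold, polarity = stump
    return polarity if x > threshold else -polarity

def fit_weighted_stump(xs, ys, weights):
    """Pick the stump with the smallest weighted error."""
    best_stump, best_error = None, float("inf")
    for threshold in sorted(set(xs)):
        for polarity in (1, -1):
            stump = (threshold, polarity)
            error = sum(w for x, y, w in zip(xs, ys, weights)
                        if stump_predict(stump, x) != y)
            if error < best_error:
                best_stump, best_error = stump, error
    return best_stump, best_error

def adaboost_fit(xs, ys, n_rounds=3):
    weights = [1.0 / len(xs)] * len(xs)  # every point starts with the same weight
    ensemble = []
    for _ in range(n_rounds):
        stump, error = fit_weighted_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)  # guard against log(0)
        alpha = 0.5 * math.log((1 - error) / error)  # this model's say in the vote
        ensemble.append((alpha, stump))
        # Misclassified points (y * prediction == -1) get heavier, the rest lighter.
        weights = [w * math.exp(-alpha * y * stump_predict(stump, x))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def adaboost_predict(ensemble, x):
    score = sum(alpha * stump_predict(stump, x) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1

# Toy data: +1 only in the middle band, which no single stump can separate.
xs = list(range(10))
ys = [-1, -1, -1, 1, 1, 1, 1, -1, -1, -1]
ensemble = adaboost_fit(xs, ys)
print([adaboost_predict(ensemble, x) for x in (1, 5, 8)])  # [-1, 1, -1]
```

The best single stump misclassifies three of the ten points here, while the weighted vote of three stumps classifies all of them correctly.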

Popular boosting algorithms are:

- CatBoost

- LightGBM

- AdaBoost

The benefit of boosting is that it generates superior predictions and reduces errors due to bias.

Other Ensemble Techniques

Mixture of Experts: it’s used to train multiple classifiers, and their outputs are combined with a general linear rule. Here, the weights given to the combinations are determined by a trainable model.

Majority voting: it involves choosing an odd number of classifiers, and predictions are computed for each sample. The class receiving the most votes from the classifier pool is the predicted class of the ensemble. It’s used for solving problems like binary classification.

Max Rule: it uses the probability distributions of each classifier and employs confidence in making predictions. It’s used for multi-class classification problems.
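To illustrate the max rule, here’s a short Python sketch. I’m assuming each classifier outputs one probability per class, and that the rule picks the class with the single highest probability reported by any classifier; the function name and the numbers are made up for illustration.

```python
def max_rule(probabilities_per_classifier):
    """Pick the class whose highest probability across classifiers is the largest."""
    n_classes = len(probabilities_per_classifier[0])
    best_per_class = [
        max(probs[c] for probs in probabilities_per_classifier)
        for c in range(n_classes)
    ]
    return max(range(n_classes), key=lambda c: best_per_class[c])

# Three hypothetical classifiers scoring three classes for one sample.
probabilities = [
    [0.50, 0.30, 0.20],
    [0.10, 0.80, 0.10],
    [0.40, 0.35, 0.25],
]
print(max_rule(probabilities))  # 1
```

Note that a majority vote on the same numbers would pick class 0, since two of the three classifiers rank it first. The max rule instead trusts the single most confident classifier.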

Use Cases of Ensemble Learning

#1. Face and emotion detection

Ensemble learning uses techniques like independent component analysis (ICA) to perform face detection.

Moreover, ensemble learning is used in detecting a person’s emotion through speech. In addition, its capabilities help users perform facial emotion detection.

#2. Security

Fraud detection: Ensemble learning helps enhance the power of normal behavior modeling. This is why it’s deemed efficient in detecting fraudulent activities, for instance, in credit card and banking systems, telecommunication fraud, money laundering, etc.

DDoS: Distributed denial of service (DDoS) is a serious attack on an ISP. Ensemble classifiers can reduce detection errors and also discriminate attacks from genuine traffic.

Intrusion detection: Ensemble learning can be used in monitoring systems like intrusion detection tools to detect intruder codes by monitoring networks or systems, finding anomalies, and so on.

Detecting malware: Ensemble learning is quite effective in detecting and classifying malware code like computer viruses and worms, ransomware, trojan horses, spyware, etc. using machine learning techniques.

#3. Incremental Learning

In incremental learning, an ML algorithm learns from a new dataset while retaining previous learnings but without accessing the previous data it has seen. Ensemble systems are used in incremental learning by training an additional classifier on each dataset as it becomes available.

#4. Medicine

Ensemble classifiers are useful in the field of medical diagnosis, such as the detection of neuro-cognitive disorders (like Alzheimer’s). They perform detection by taking MRI datasets as inputs, and they are also used for classifying cervical cytology. Apart from that, they’re used in proteomics (the study of proteins), neuroscience, and other areas.

#5. Remote Sensing

Change detection: Ensemble classifiers are used to perform change detection through methods like Bayesian averaging and majority voting.

Mapping land cover: Ensemble learning methods like boosting, decision trees, kernel principal component analysis (KPCA), etc. are being used to detect and map land cover efficiently.

#6. Finance

Accuracy is a critical aspect of finance, whether it’s calculation or prediction. It highly influences the outcome of the decisions you make. Ensemble methods can also analyze changes in stock market data, detect manipulation in stock prices, and more.

More Learning Resources

#1. Ensemble Methods for Machine Learning

This book will help you learn and implement important methods of ensemble learning from scratch.

#2. Ensemble Methods: Foundations and Algorithms

This book covers the basics of ensemble learning and its algorithms. It also outlines how it’s used in the real world.

#3. Ensemble Learning

It offers an introduction to a unified ensemble methodology, challenges, applications, etc.

#4. Ensemble Machine Learning: Methods and Applications

It provides wide coverage of advanced ensemble learning techniques.

Conclusion

I hope you now have some idea about ensemble learning, its methods, use cases, and why using it can be beneficial for your use case. It has the potential to solve many real-life challenges, from security and app development to finance, medicine, and more. Its uses are expanding, so there’s likely to be more improvement in this concept in the near future.

You may also explore some tools for synthetic data generation to train machine learning models.