Computer usage is at an all-time high and continues to rise. Over the past thirty years, machines have improved enormously, particularly in processing power and multitasking.

Can you imagine how much performance could improve if tasks were split across several machines and run in parallel? This is called distributed computing. It is like teamwork for computers.

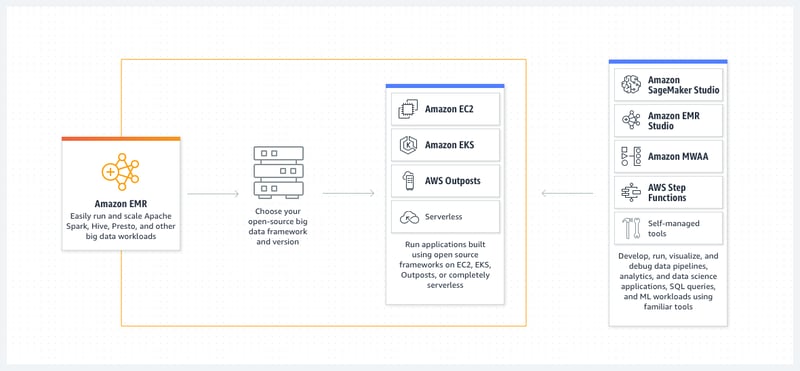

You may be wondering why we are discussing distributed computing. The reason is that distributed computing and Amazon EMR (Elastic MapReduce) are closely related: Amazon EMR uses distributed computing concepts to process and analyze large amounts of data in the cloud.

With Amazon EMR, you can analyze and process big data using a distributed processing framework of your choice on EC2 instances.

How does Amazon EMR work?

First, load the data into a data store such as Amazon S3, DynamoDB, or another AWS storage platform, as all of them integrate well with EMR.

Next, you need a big data framework to process and analyze this data. With several big data frameworks to choose from, such as Apache Spark, Hadoop, Hive, and Presto, you can pick the one that suits your needs and upload it to the chosen data store.

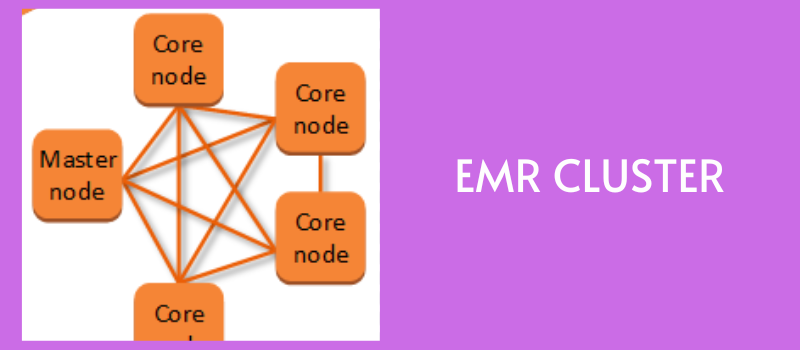

An EMR cluster of EC2 instances is then created to process and analyze the data in parallel. You can configure the number of nodes and other details when creating the cluster.

Your primary storage distributes the data and frameworks to these nodes, where the data chunks are processed individually and the results are combined.
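
The split, process, and combine pattern described above can be sketched locally. This is a minimal illustration, not how EMR itself is implemented: threads stand in for cluster nodes, and the function names (`split_into_chunks`, `count_words`, `combine`) and the sample lines are made up for the example.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(lines, num_chunks):
    """Deal the input lines out into num_chunks roughly equal chunks."""
    return [lines[i::num_chunks] for i in range(num_chunks)]


def count_words(chunk):
    """The 'map' step: each worker counts words in its own chunk only."""
    counts = Counter()
    for line in chunk:
        counts.update(line.lower().split())
    return counts


def combine(partial_counts):
    """The 'reduce' step: merge the per-chunk results into one total."""
    total = Counter()
    for counts in partial_counts:
        total.update(counts)
    return total


def distributed_word_count(lines, num_workers=3):
    chunks = split_into_chunks(lines, num_workers)
    # Threads stand in for cluster nodes; this is a local simulation only.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partial_counts = list(pool.map(count_words, chunks))
    return combine(partial_counts)


# Hypothetical sample input.
lines = [
    "spark hive hadoop",
    "spark presto",
    "hadoop spark",
    "hive",
]
word_counts = distributed_word_count(lines)
print(word_counts.most_common())
```

Because each chunk is counted independently, adding workers only changes how the input is dealt out, not the combined result.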

Once the results are ready, you can terminate the cluster to release all allocated resources.
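
As a rough sketch of what configuring such a cluster can look like, the helper below builds a request dictionary whose keys follow the parameters of boto3's `run_job_flow` call as I understand them. The helper name, the cluster name, the instance type, and the release label are assumptions chosen for illustration; check them against the current EMR documentation before use. Nothing here contacts AWS.

```python
def build_cluster_request(name, node_count, instance_type="m5.xlarge"):
    """Build a request dict shaped like boto3's EMR run_job_flow parameters.

    Hypothetical helper: one primary node plus (node_count - 1) core nodes.
    """
    if node_count < 2:
        raise ValueError("need at least one primary and one core node")
    return {
        "Name": name,
        "ReleaseLabel": "emr-6.15.0",  # assumed release; pick a current one
        "Applications": [{"Name": "Spark"}],
        "Instances": {
            "InstanceGroups": [
                {
                    "Name": "Primary",
                    "InstanceRole": "MASTER",
                    "InstanceType": instance_type,
                    "InstanceCount": 1,
                },
                {
                    "Name": "Core",
                    "InstanceRole": "CORE",
                    "InstanceType": instance_type,
                    "InstanceCount": node_count - 1,
                },
            ],
            # False asks EMR to shut the cluster down once all steps finish,
            # which releases the allocated resources automatically.
            "KeepJobFlowAliveWhenNoSteps": False,
        },
        "JobFlowRole": "EMR_EC2_DefaultRole",
        "ServiceRole": "EMR_DefaultRole",
    }


request = build_cluster_request("example-analytics-cluster", node_count=4)
# With credentials configured, this could be submitted roughly as:
#   boto3.client("emr").run_job_flow(**request)
print(request["Instances"]["InstanceGroups"][1]["InstanceCount"])
```

Keeping the request as plain data makes it easy to review the node count and instance type before anything is launched.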

Advantages of Amazon EMR

Companies, small or large, always look for cost-effective solutions. So why not the affordable Amazon EMR? It simplifies running various big data frameworks on AWS, making it a convenient way to process and analyze your data while saving some money.

✅ Elasticity: You can guess its nature from the term "Elastic MapReduce". Depending on your requirements, Amazon EMR lets you easily resize clusters manually or automatically. For example, you may need 200 instances to process your requests now, and this could increase to 600 instances in an hour or two. So Amazon EMR is a good fit if you need scalability to adapt to rapid changes in demand.

✅ Data storage: Whether it is Amazon S3, the Hadoop Distributed File System (HDFS), Amazon DynamoDB, or another AWS data store, Amazon EMR integrates seamlessly with it.

✅ Data processing tools: Amazon EMR supports several big data frameworks, including Apache Spark, Hive, Hadoop, and Presto. In addition, you can run deep learning and machine learning algorithms and tools on it.

✅ Cost efficient: Unlike other commercial products, Amazon EMR lets you pay only for the resources you actually use. In addition, you can choose from different pricing models to suit your budget.

✅ Cluster customization: EMR lets you customize each instance in your cluster. You can also pair a big data framework with a well-suited cluster type. For example, Apache Spark and Graviton2-based instances are a strong combination for optimized performance in EMR.

✅ Access controls: You can use AWS Identity and Access Management (IAM) to manage permissions in EMR. For example, you can allow specific users to edit the cluster, while others can only view it.

✅ Integration: EMR integrates seamlessly with other AWS services. This gives you virtual servers, robust security, expandable capacity, and analytics within EMR.
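
To make the access-control benefit in the list above more concrete, here is a hedged sketch of two IAM policy documents, one for users who may only view clusters and one for users who may also edit them. The `elasticmapreduce:` action names are real IAM actions to the best of my knowledge, but the grouping into "view" and "edit" sets and the helper names are assumptions for this example. The `is_allowed` check is a toy lookup, not IAM's actual evaluation logic.

```python
import json

# Assumed grouping of EMR actions into read-only and editing permissions.
VIEW_ACTIONS = {
    "elasticmapreduce:DescribeCluster",
    "elasticmapreduce:ListClusters",
    "elasticmapreduce:ListSteps",
}
EDIT_ACTIONS = VIEW_ACTIONS | {
    "elasticmapreduce:AddJobFlowSteps",
    "elasticmapreduce:ModifyInstanceGroups",
    "elasticmapreduce:TerminateJobFlows",
}


def make_policy(actions):
    """Build an IAM policy document that allows the given actions."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": sorted(actions), "Resource": "*"}
        ],
    }


def is_allowed(policy, action):
    """Toy check: is the action listed in any Allow statement?"""
    return any(
        stmt["Effect"] == "Allow" and action in stmt["Action"]
        for stmt in policy["Statement"]
    )


viewer_policy = make_policy(VIEW_ACTIONS)
editor_policy = make_policy(EDIT_ACTIONS)
print(json.dumps(viewer_policy, indent=2))
```

In practice you would narrow `Resource` from `"*"` to specific cluster ARNs rather than granting access to every cluster.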

Amazon EMR use cases

#1. Machine learning

Analyze data using machine learning and deep learning in Amazon EMR. For example, running different algorithms on health-related data to track several health metrics, such as body mass index, heart rate, blood pressure, and body fat percentage, is essential to building a fitness tracker. All of this can be done faster and more efficiently on EMR instances.

#2. Perform large transformations

Retailers often collect a large amount of digital data to analyze customer behavior and improve the business. Along the same lines, Amazon EMR is efficient at gathering big data and performing large transformations using Spark.

#3. Data mining

Do you want to tackle a dataset that takes a long time to process? Amazon EMR is well suited to data mining and predictive analytics on complex data sets, especially unstructured data. In addition, the cluster architecture is ideal for parallel processing.

#4. Research purposes

Do your research with this cost-effective and efficient framework. Thanks to its scalability, you rarely see performance issues when running large datasets on EMR. This makes it very suitable for big data research and analytics labs.

#5. Real-time streaming

Another strength of Amazon EMR is its support for real-time streaming. Build scalable real-time streaming data pipelines for online gaming, video streaming, traffic monitoring, and stock trading with Apache Kafka and Apache Flink on Amazon EMR.

How is EMR different from AWS Glue and Redshift?

AWS EMR vs Glue

Two powerful AWS services, Amazon EMR and AWS Glue, have gained a loyal following for working with data.

Extracting data from various sources, transforming it, and loading it into data warehouses is fast and efficient with AWS Glue, while Amazon EMR helps you run your big data applications using Hadoop, Spark, Hive, and so on.

Basically, AWS Glue lets you collect data and prepare it for analysis, and Amazon EMR lets you process it.

EMR vs Redshift

Imagine constantly navigating your data and retrieving it with ease. SQL is what you typically use to do this. In the same vein, Redshift provides optimized online analytical processing (OLAP) services to easily query large amounts of data using SQL.

For storing data, Amazon EMR relies on highly scalable, secure, and available storage services such as S3 and DynamoDB. Redshift, on the other hand, has its own data layer, which stores data in columnar format.

Amazon EMR value optimization approaches

#1. Use formatted data

The larger the data, the longer it takes to process. In addition, feeding raw data directly into the cluster makes things even more complex, because it takes more time to find the part you want to process.

Formatted data, by contrast, comes with metadata about columns, data types, size, and more, which can save you time on searches and aggregations.

Also reduce the data size by using data compression techniques, as smaller data sets are comparatively easier to process.
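
As a small illustration of the compression point, the sketch below gzips a batch of JSON records before they would be uploaded as job input. The records are synthetic and deliberately repetitive, so the savings here are larger than you should expect from real data; gzip is just one common choice, not a recommendation specific to EMR.

```python
import gzip
import json

# Synthetic, hypothetical event records standing in for raw input data.
records = [
    {"user_id": i, "country": "US", "event": "page_view"} for i in range(1000)
]

# One JSON document per line, a common layout for batch input files.
raw = "\n".join(json.dumps(record) for record in records).encode("utf-8")
compressed = gzip.compress(raw)

# Decompressing returns exactly the original bytes, so nothing is lost.
restored = gzip.decompress(compressed)

print(f"raw: {len(raw)} bytes, gzip: {len(compressed)} bytes")
```

Smaller input files mean less data to store and to move between storage and the cluster nodes.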

#2. Use affordable storage services

By using cost-effective primary storage services, you can cut a large share of your EMR expenses. Amazon S3 is a simple and affordable storage service for storing input and output data. Its pay-as-you-go model charges only for the storage space you actually use.

#3. Right-size your instances

Using the right instances with the right sizes can significantly reduce the budget you spend on EMR. EC2 instances are typically charged per second, and the price varies with their size, but whether you use a .7x large cluster or a .36x large cluster, the cost of managing them is the same. Using larger machines efficiently is therefore more cost-effective than using several small machines.

#4. Spot instances

Spot instances are a great option for buying unused EC2 capacity at a discount. Compared to On-Demand instances, they are cheaper but not permanent, as they can be reclaimed when demand increases. So they suit flexible, fault-tolerant workloads, but not long-running tasks.
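
One common way to apply this is to keep the nodes the cluster depends on as On-Demand and put only the extra task nodes on Spot. The sketch below builds instance group definitions in the shape that boto3's `run_job_flow` accepts under `Instances.InstanceGroups`, as far as I know. The helper name, group names, and instance type are assumptions for illustration, and nothing here calls AWS.

```python
def build_instance_groups(core_count, spot_task_count, instance_type="m5.xlarge"):
    """Return instance groups with On-Demand primary/core and Spot task nodes.

    Hypothetical helper. Task nodes do not store HDFS data, so losing them
    to a Spot reclaim costs compute capacity but not stored data.
    """
    groups = [
        {
            "Name": "Primary",
            "InstanceRole": "MASTER",
            "Market": "ON_DEMAND",
            "InstanceType": instance_type,
            "InstanceCount": 1,
        },
        {
            "Name": "Core",
            "InstanceRole": "CORE",
            "Market": "ON_DEMAND",
            "InstanceType": instance_type,
            "InstanceCount": core_count,
        },
    ]
    if spot_task_count > 0:
        groups.append(
            {
                "Name": "Task (Spot)",
                "InstanceRole": "TASK",
                "Market": "SPOT",
                "InstanceType": instance_type,
                "InstanceCount": spot_task_count,
            }
        )
    return groups


instance_groups = build_instance_groups(core_count=2, spot_task_count=6)
for group in instance_groups:
    print(group["Name"], group["Market"], group["InstanceCount"])
```

If the Spot capacity is reclaimed, the job slows down rather than failing outright, because the primary and core nodes remain.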

#5. Scale automatically

The auto-scaling feature is all you need to avoid clusters that are too large or too small. It lets you choose the right number and type of instances for your cluster based on workload, optimizing costs.
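
The idea behind scaling on workload can be shown with a simple rule: size the cluster to the pending work, but never go below a minimum or above a maximum. This is an illustrative rule only, not the algorithm EMR uses; the function name, the "tasks per node" capacity, and the numbers are all assumptions.

```python
import math


def target_node_count(pending_tasks, tasks_per_node, min_nodes, max_nodes):
    """Pick a node count for the pending work, clamped to [min_nodes, max_nodes]."""
    if tasks_per_node <= 0:
        raise ValueError("tasks_per_node must be positive")
    if min_nodes > max_nodes:
        raise ValueError("min_nodes must not exceed max_nodes")
    needed = math.ceil(pending_tasks / tasks_per_node)
    return max(min_nodes, min(max_nodes, needed))


# Hypothetical workload: each node handles 10 tasks at a time.
quiet = target_node_count(pending_tasks=0, tasks_per_node=10, min_nodes=2, max_nodes=600)
normal = target_node_count(pending_tasks=2000, tasks_per_node=10, min_nodes=2, max_nodes=600)
spike = target_node_count(pending_tasks=9000, tasks_per_node=10, min_nodes=2, max_nodes=600)
print(quiet, normal, spike)
```

The lower bound keeps the cluster usable when idle, and the upper bound caps spending during a spike.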

Final words

There is no end to cloud and big data technology, which gives you endless tools and frameworks to learn and implement. One platform that leverages both big data and the cloud is Amazon EMR, as it simplifies running big data frameworks for processing and analyzing big data.

To help you get started with EMR, this article has shown you what it is, its benefits, how it works, its use cases, and cost-effective approaches to using it.
