SEO log file analysis helps you understand how crawlers behave on your website and identify potential technical SEO optimization opportunities.

SEO without analyzing crawler behavior is like flying blind. You may have submitted your website to Google Search Console and gotten it indexed, but without studying the log files, you won’t know whether search engine bots are crawling and reading your website properly.

That’s why I’ve assembled everything you need to know to analyze log files for SEO and identify issues and SEO opportunities from them.

What Is Log File Analysis?

SEO log file analysis is the process of recognizing patterns in how search engine bots interact with a website. Log file analysis is a part of technical SEO.

Log file auditing is important for SEOs because it helps them recognize and resolve issues related to crawling, indexing, and status codes.

What Are Log Files?

Log files record who visits a website and what content they view. They contain information about who requested access to the website (also known as ‘the client’).

The requests recorded can come from search engine bots such as Googlebot or Bingbot, or from human visitors. Log file records are typically collected and maintained by the site’s web server, and they are usually kept for a certain period of time.

What Does a Log File Contain?

Before looking at why log files matter for SEO, it’s important to know what’s inside them. A log file contains the following data points:

- URL of the page the visitor is requesting

- HTTP status code of the response

- IP address of the client making the request

- Date and time of the hit

- User agent of the client (for example, a search engine bot) making the request

- Request method (GET/POST)
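To make these fields concrete, here is a minimal Python sketch that parses a single access-log line. It assumes the Apache/Nginx “combined” log format. Servers such as IIS, or custom Nginx formats, lay the fields out differently and would need a different pattern. `COMBINED_LOG`, `parse_line`, and the sample line are illustrative and are not taken from any tool.

```python
import re
from datetime import datetime

# Apache/Nginx "combined" format:
# IP ident user [time] "METHOD URL PROTOCOL" status size "referer" "user agent"
COMBINED_LOG = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


def parse_line(line):
    """Return the fields of one combined-format log line as a dict, or None if it doesn't match."""
    match = COMBINED_LOG.match(line)
    if match is None:
        return None
    entry = match.groupdict()
    # Timestamps look like 10/Oct/2023:13:55:36 +0000
    entry["time"] = datetime.strptime(entry["time"], "%d/%b/%Y:%H:%M:%S %z")
    entry["status"] = int(entry["status"])
    # A "-" size means no response body was sent
    entry["size"] = 0 if entry["size"] == "-" else int(entry["size"])
    return entry


sample = (
    '66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] '
    '"GET /products/blue-widget HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
)
entry = parse_line(sample)
print(entry["url"], entry["status"], entry["user_agent"])
```

Each resulting dictionary holds the request URL, method, status code, response size, timestamp, and user agent, which are the fields most SEO log analyses start from.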

Log files can seem complicated when you first look at them. However, once you understand their purpose and importance for SEO, you can use them effectively to generate valuable SEO insights.

Purpose of Log File Analysis for SEO

Log file analysis helps resolve some important technical SEO issues, which lets you build an effective SEO strategy to optimize your website.

Here are some SEO issues that can be analyzed using log files:

#1. Frequency of Googlebot crawling the website

Search engine bots or crawlers should crawl your important pages frequently so that the search engine learns about your website updates and new content.

Your important product and information pages should all appear in your logs as Googlebot requests. Googlebot still crawling a product page for a product you no longer sell, or never requesting some of your most important category pages, are signs of a problem that log files can reveal.
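A minimal sketch of this check in Python, assuming the log lines have already been parsed into dictionaries with hypothetical `url` and `user_agent` keys. It counts Googlebot requests per URL and lists important pages that never appear. Matching on the string “Googlebot” is a simplification: the user agent can be spoofed, so a production analysis should also verify the requesting IPs.

```python
from collections import Counter

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def googlebot_hits(records):
    """Count requests per URL whose user agent claims to be Googlebot."""
    # The user-agent string can be spoofed; verify IPs before trusting these counts
    return Counter(r["url"] for r in records if "Googlebot" in r["user_agent"])


def never_crawled(important_urls, hits):
    """Return the important URLs Googlebot did not request in this log window."""
    return sorted(url for url in important_urls if hits[url] == 0)


# Hypothetical parsed log records and a hand-picked list of key pages
records = [
    {"url": "/category/shoes", "user_agent": GOOGLEBOT_UA},
    {"url": "/category/shoes", "user_agent": GOOGLEBOT_UA},
    {"url": "/products/discontinued-boot", "user_agent": GOOGLEBOT_UA},
    {"url": "/category/bags", "user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"},
]
important = ["/category/shoes", "/category/bags"]

hits = googlebot_hits(records)
print(hits.most_common())
print(never_crawled(important, hits))
```

In this sample the discontinued product still receives crawl hits while `/category/bags` is only visited by a browser, which are the two warning signs described in this section.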

How does a search engine bot use crawl budget?

Every time a search engine crawler visits your website, it works within a limited “crawl budget.” Google describes crawl budget as the number of URLs Googlebot can and wants to crawl, based on a combination of the site’s crawl rate limit and crawl demand.

Crawling and indexing of a website may be hampered if it has many low-value URLs or URLs that aren’t correctly submitted in the sitemap. Key pages are crawled and indexed more easily when your crawl budget is optimized.
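One rough way to see where crawl budget goes is to measure how many Googlebot requests land on parameterized URLs (sorting, filtering, pagination), which are often low-value duplicates. The sketch below treats “has a query string” as a proxy for “low-value”. That heuristic is an assumption, not Google’s definition, and the function names are illustrative.

```python
from collections import Counter
from urllib.parse import parse_qs, urlsplit


def parameter_share(urls):
    """Fraction of crawled URLs that carry a query string."""
    if not urls:
        return 0.0
    return sum(1 for u in urls if urlsplit(u).query) / len(urls)


def parameter_names(urls):
    """How often each query parameter name appears across crawled URLs."""
    names = Counter()
    for u in urls:
        names.update(parse_qs(urlsplit(u).query).keys())
    return names


# Hypothetical URLs requested by Googlebot, e.g. taken from parsed log records
crawled = ["/shoes", "/shoes?sort=price", "/shoes?sort=price&page=2", "/bags"]
print(f"{parameter_share(crawled):.0%} of Googlebot requests hit parameterized URLs")
print(parameter_names(crawled).most_common())
```

If a single parameter, such as `sort` here, accounts for a large share of hits, those URL variants may be worth consolidating so the budget goes to key pages instead.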

Log file analysis helps optimize crawl budget, which accelerates your SEO efforts.

#2. Mobile-first indexing issues and status

Mobile-first indexing now matters for every website, because Google predominantly uses the mobile version of a page for indexing and ranking. Log file analysis tells you how frequently smartphone Googlebot crawls your website.

This analysis helps webmasters optimize the mobile versions of their pages if those pages aren’t being crawled correctly by smartphone Googlebot.
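Smartphone and desktop Googlebot can be told apart by their user-agent strings: Google’s documented smartphone user agent contains “Mobile”, while the desktop one does not. The sketch below relies on that substring check, which is a heuristic. The Chrome version in the sample strings is a placeholder, since Google updates it over time.

```python
from collections import Counter

SMARTPHONE_UA = (
    "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Mobile Safari/537.36 "
    "(compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
)
DESKTOP_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def googlebot_type(user_agent):
    """Classify a user agent as smartphone or desktop Googlebot, or None for anything else."""
    # Requiring "Googlebot/" skips other Google crawlers such as Googlebot-Image
    if "Googlebot/" not in user_agent:
        return None
    return "smartphone" if "Mobile" in user_agent else "desktop"


def crawler_mix(user_agents):
    """Count smartphone vs desktop Googlebot requests."""
    return Counter(t for t in map(googlebot_type, user_agents) if t is not None)


print(crawler_mix([SMARTPHONE_UA, SMARTPHONE_UA, DESKTOP_UA, "Googlebot-Image/1.0"]))
```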

#3. HTTP status codes returned by web pages when requested

The most recent response codes your web pages return can be retrieved either from log files or with the URL Inspection tool in Google Search Console (the successor to the older Fetch and Render option).

Log file analyzers can find pages returning 3xx, 4xx, and 5xx codes. You can resolve these issues by taking the appropriate action, for example, redirecting URLs to the correct destinations or changing 302 (temporary) redirects to 301 (permanent) redirects when a move is permanent.
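A minimal sketch of a status-code report, assuming parsed records with hypothetical `url` and integer `status` keys. It groups responses by class (2xx, 3xx, and so on) and lists the URLs behind 302 redirects and 4xx/5xx errors, which are the ones most likely to need a fix.

```python
from collections import Counter, defaultdict


def status_report(records):
    """Count responses per status class and list the URLs behind 302s and errors."""
    classes = Counter(f"{r['status'] // 100}xx" for r in records)
    needs_action = defaultdict(set)
    for r in records:
        # 302 = temporary redirect (check whether it should be a 301); 4xx/5xx = errors
        if r["status"] == 302 or r["status"] >= 400:
            needs_action[r["status"]].add(r["url"])
    return classes, {code: sorted(urls) for code, urls in sorted(needs_action.items())}


# Hypothetical Googlebot records with parsed status codes
records = [
    {"url": "/shoes", "status": 200},
    {"url": "/old-shoes", "status": 301},
    {"url": "/summer-sale", "status": 302},
    {"url": "/deleted-page", "status": 404},
    {"url": "/deleted-page", "status": 404},
    {"url": "/search", "status": 500},
]
classes, needs_action = status_report(records)
print(classes)
print(needs_action)
```

Whether a given 302 should become a 301 still depends on whether the move is permanent; the report only surfaces candidates.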

#4. Analyzing crawl activity such as crawl depth and internal links

Google understands your website structure based on its crawl depth and internal links. A poor internal linking structure and excessive crawl depth are common reasons a website isn’t crawled properly.

If you have difficulties with your website’s hierarchy, site structure, or internal link structure, you can use log file analysis to find them.

Log file analysis helps optimize the site architecture and internal link structure.
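Crawl depth is the number of clicks from the homepage to a page, which a breadth-first search over your internal-link graph computes directly. This sketch assumes you already have that graph (for example, exported from a site crawler) as a dictionary mapping each page to the pages it links to, plus Googlebot hit counts per URL from your logs. Both inputs and all names are hypothetical.

```python
from collections import Counter, deque


def crawl_depths(links, start="/"):
    """Breadth-first search over internal links: minimum clicks from `start` to each URL."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def hits_by_depth(depths, hits):
    """Sum bot hits for the URLs found at each click depth."""
    totals = Counter()
    for url, depth in depths.items():
        totals[depth] += hits.get(url, 0)
    return dict(sorted(totals.items()))


# Hypothetical internal-link graph (e.g. from a site crawl) and Googlebot hit counts from logs
links = {
    "/": ["/shoes", "/bags"],
    "/shoes": ["/shoes/running"],
    "/shoes/running": ["/shoes/running/trail-x"],
}
hits = {"/": 40, "/shoes": 25, "/bags": 20, "/shoes/running": 6, "/shoes/running/trail-x": 1}
print(hits_by_depth(crawl_depths(links), hits))
```

A sharp drop in hits at deeper levels, as in this sample, suggests those pages may be buried too far from the homepage.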

#5. Discover Orphaned Pages

Orphaned pages are web pages on a website that aren’t linked from any other page. It’s difficult for such pages to get indexed or appear in search engines because bots can’t easily discover them.

Orphaned pages can be found by comparing the URLs in your log files with a crawl from a tool like Screaming Frog, since a link-following crawler alone never reaches them. The issue can be resolved by linking to these pages from other pages on the website.
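Orphan detection boils down to a set difference: URLs that bots request in the logs but that a link-following crawl never reached. This is a minimal sketch. `normalize` and `find_orphans` are hypothetical helpers, and stripping query strings and trailing slashes is a simplifying choice so that relative log paths and absolute crawler URLs can be compared.

```python
from urllib.parse import urlsplit


def normalize(url):
    """Reduce absolute or relative URLs to a bare path without a trailing slash."""
    path = urlsplit(url).path
    return path.rstrip("/") or "/"


def find_orphans(logged_urls, crawled_urls):
    """Paths bots requested (per the logs) that a link-following crawl never reached."""
    crawled = {normalize(u) for u in crawled_urls}
    return sorted({normalize(u) for u in logged_urls} - crawled)


# Hypothetical inputs: request paths from logs, absolute URLs from a crawler export
logged = ["/", "/shoes", "/shoes?color=red", "/old-landing-page/"]
crawled = ["https://example.com/", "https://example.com/shoes"]
print(find_orphans(logged, crawled))
```

Before acting on the result, filter the log side to URLs that returned 200, since deleted pages that now return 404 also show up as “logged but not linked”.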

#6. Audit Pages for Page Speed and Experience

Page experience and Core Web Vitals are now official ranking factors, so it’s important that your web pages follow Google’s page speed guidelines.

Slow or large pages can be discovered using log file analyzers, and optimizing these pages for speed can help their overall ranking on the SERP.
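Log files record the size of each response, so large pages can be found without extra tooling. This sketch averages the logged response size per URL for successful (200) responses and flags those above a threshold. The 500,000-byte threshold is an arbitrary placeholder, and the logged size covers only the document itself: images, scripts, and stylesheets are separate requests with their own log lines.

```python
from collections import defaultdict


def large_pages(records, threshold_bytes=500_000):
    """Average response size per URL (200 responses only), largest first, above a threshold."""
    sizes = defaultdict(list)
    for r in records:
        if r["status"] == 200:
            sizes[r["url"]].append(r["size"])
    averages = {url: sum(s) / len(s) for url, s in sizes.items()}
    flagged = [(url, avg) for url, avg in averages.items() if avg > threshold_bytes]
    return sorted(flagged, key=lambda item: item[1], reverse=True)


# Hypothetical records; "size" is the response body size in bytes from the log line
records = [
    {"url": "/gallery", "status": 200, "size": 900_000},
    {"url": "/gallery", "status": 200, "size": 1_100_000},
    {"url": "/video-page", "status": 200, "size": 600_000},
    {"url": "/about", "status": 200, "size": 40_000},
    {"url": "/gallery", "status": 304, "size": 0},
]
print(large_pages(records))
```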

Log file analysis helps you gain control over how your website is crawled and how search engines handle it.

Now that we’re clear on the basics of log files and their analysis, let’s look at the process of auditing log files for SEO.

How to Do Log File Analysis

We’ve already looked at different aspects of log files and their importance for SEO. Now it’s time to learn how to analyze the files and which tools are best for the job.

You will need access to the website’s server to obtain the log files. The files can be analyzed in the following ways:

- Manually, using Excel or other data visualization tools

- Using log file analysis tools

There are several steps involved in analyzing log files manually:

- Collect or export the log data from the web server, and filter it for search engine bots or crawlers.

- Convert the downloaded file into a readable format using data analysis tools.

- Analyze the data manually in Excel or other visualization tools to find SEO gaps and opportunities.

- You can also use filtering programs and the command line to make the job easier.
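The collect-and-filter steps can be scripted. This minimal sketch reads raw access-log lines in the combined format (an assumption about your server), keeps only requests whose user agent contains a common search engine crawler token, and writes them as CSV that Excel can open. `export_bot_hits`, `BOT_TOKENS`, and the token list are illustrative choices.

```python
import csv
import re

# Combined log format (Apache/Nginx); adjust the pattern if your server logs differently
LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referer>[^"]*)" "(?P<user_agent>[^"]*)"'
)
# User-agent substrings of major search engine crawlers (case-sensitive)
BOT_TOKENS = ("Googlebot", "bingbot", "YandexBot", "Baiduspider")
FIELDS = ["time", "ip", "method", "url", "status", "size", "user_agent"]


def export_bot_hits(lines, out):
    """Write search-engine-bot requests from raw log lines to `out` as CSV; return the row count."""
    writer = csv.DictWriter(out, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    written = 0
    for line in lines:
        match = LINE.match(line)
        # Skip unparseable lines and requests from browsers or other bots
        if match and any(token in match["user_agent"] for token in BOT_TOKENS):
            writer.writerow(match.groupdict())
            written += 1
    return written
```

For example, `with open("access.log") as src, open("bot_hits.csv", "w", newline="") as dst: export_bot_hits(src, dst)` would convert a local log file (the file names are placeholders). User-agent matching alone does not prove a request came from a real search engine.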

Working with log file data manually isn’t easy, as it requires knowledge of Excel and involves the development team. Log file analysis tools, however, make the job easy for SEOs.

Let’s look at the top tools for auditing log files and how each one helps us analyze them.

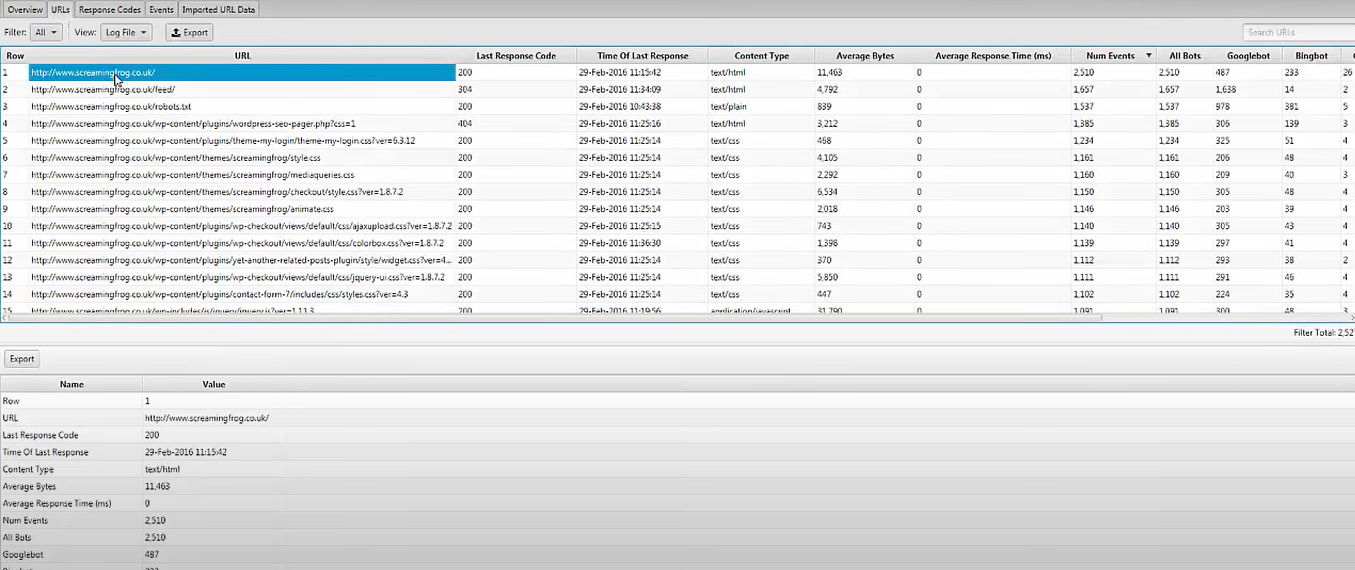

Screaming Frog Log File Analyzer

With the Screaming Frog Log File Analyzer, you can upload log file data, verify search engine bots, and identify technical SEO issues. You can also:

- Analyze search engine bot activity and data for SEO

- Discover how frequently search engine bots crawl the website

- Find all technical SEO issues and broken internal and external links

- Review the most and least crawled URLs to reduce waste and improve efficiency

- Discover pages that aren’t being crawled by search engines

- Compare and combine any data, including external link data, directives, and other information

- View data about referrer URLs

The Screaming Frog Log File Analyzer is free to use for a single project with a limit of 1,000 log events. You’ll need to upgrade to the paid version if you want unlimited access and technical support.
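Bot verification matters because anyone can send a “Googlebot” user agent. Google documents a two-step DNS check: a reverse lookup on the requesting IP must resolve, for Googlebot, to a googlebot.com or google.com host name, and a forward lookup on that host name must return the same IP. The sketch below implements that check with Python’s standard `socket` module. It is not how Screaming Frog does it internally, it only handles IPv4 (`gethostbyname_ex`), and it performs live DNS queries, so results should be cached when processing large logs.

```python
import socket

# Host-name suffixes Google documents for Googlebot's reverse DNS records
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com")


def is_google_hostname(hostname):
    """True if a reverse-DNS host name belongs to Google's crawler domains."""
    return hostname.rstrip(".").endswith(GOOGLE_SUFFIXES)


def verify_googlebot(ip):
    """Reverse-resolve the IP, check the domain, then forward-resolve to confirm the same IP."""
    try:
        hostname, _, _ = socket.gethostbyaddr(ip)  # reverse DNS (PTR) lookup
        if not is_google_hostname(hostname):
            return False
        _, _, addresses = socket.gethostbyname_ex(hostname)  # forward lookup, IPv4 only
    except OSError:  # socket.herror / socket.gaierror: no record or lookup failed
        return False
    return ip in addresses


# verify_googlebot("66.249.66.1") performs live DNS queries, so results depend on your network
```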

JetOctopus

When it comes to affordable log analyzer tools, JetOctopus is the best. It offers a seven-day free trial with no credit card required and a two-click connection. Like the other tools on our list, the JetOctopus log analyzer can identify crawl frequency, crawl budget, the most popular pages, and more.

With this tool, you can integrate log file data with Google Search Console data, giving you a distinct advantage over the competition. With this combination, you’ll be able to see how Googlebot interacts with your website and where you can improve.

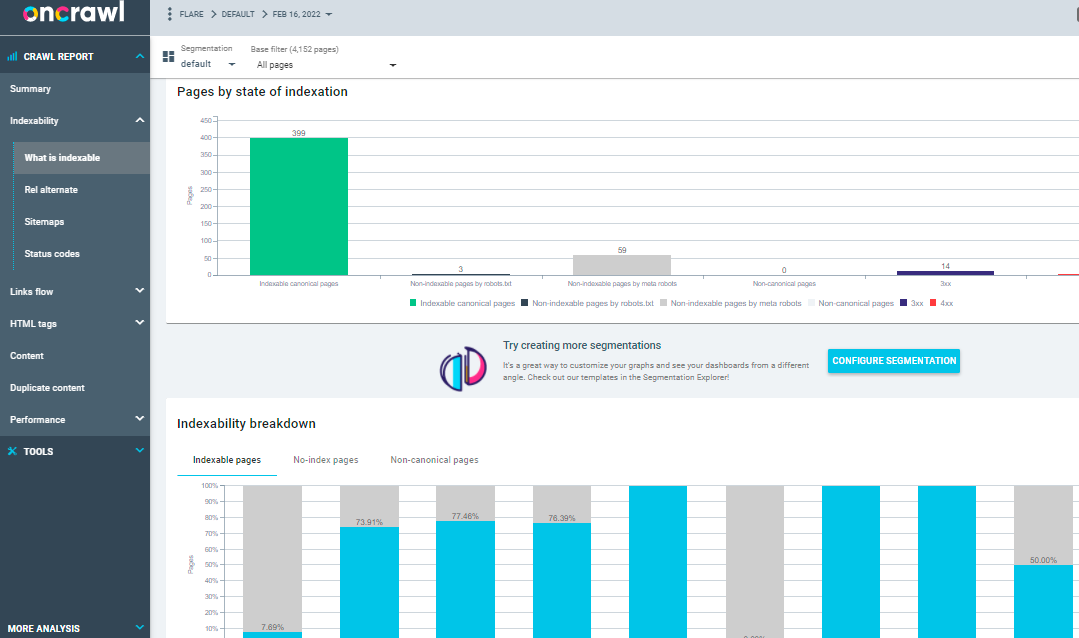

Oncrawl Log Analyzer

Oncrawl Log Analyzer, a tool designed for medium to large websites, processes over 500 million log lines a day. It monitors your web server logs in real time to make sure your pages are being properly crawled and indexed.

Oncrawl Log Analyzer is GDPR-compliant and highly secure. Rather than keeping IP addresses, the program stores all log files in a secure, segregated FTP cloud.

In addition to the features JetOctopus and Screaming Frog Log File Analyzer offer, Oncrawl has some extras, such as:

- Support for many log formats, such as IIS, Apache, and Nginx

- Easy adaptation to your processing and storage requirements as they change

- Dynamic segmentation, a powerful way to uncover patterns and connections in your data by grouping your URLs and internal links based on various criteria

- Actionable SEO reports built from data points in your raw log files

- Automated transfer of log files to your FTP space, set up with the help of technical staff

- Monitoring of all the popular search engine bots, including Google, Bing, Yandex, and Baidu’s crawlers

Oncrawl also offers two other important tools:

Oncrawl SEO Crawler: With Oncrawl SEO Crawler, you can crawl your website at high speed and with minimal resources. It improves your understanding of how ranking criteria affect search engine optimization (SEO).

Oncrawl Data: Oncrawl Data analyzes all SEO factors by combining crawl and analytics data. It draws on the crawl and log files to understand crawl behavior and recommends how to direct crawl budget toward priority content or ranking pages.

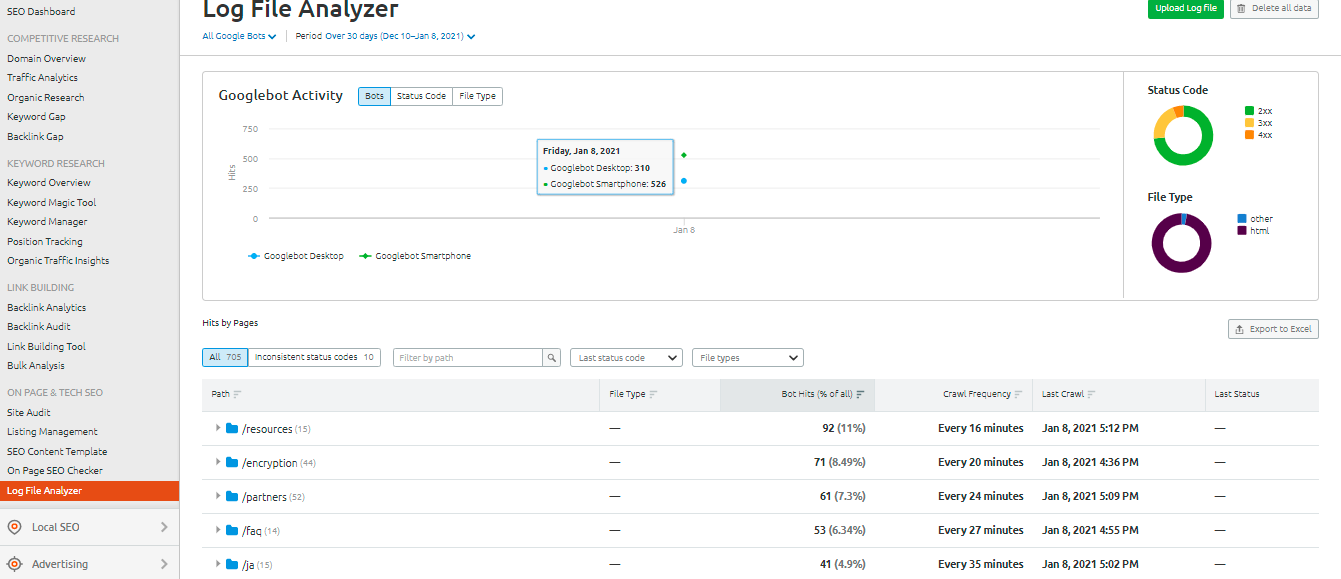

SEMrush Log File Analyzer

The SEMrush Log File Analyzer is a smart choice if you want a simple, browser-based log analysis tool. It doesn’t require downloading anything and runs entirely online.

SEMrush offers two reports:

Pages’ Hits: The Pages’ Hits report shows how web crawlers interact with your website’s content. It gives you data on the pages, folders, and URLs with the most and fewest bot interactions.

Googlebot Activity: The Googlebot Activity report provides site-related insights on a daily basis, such as:

- The types of files crawled

- The overall HTTP status codes

- The number of requests made to your website by various bots

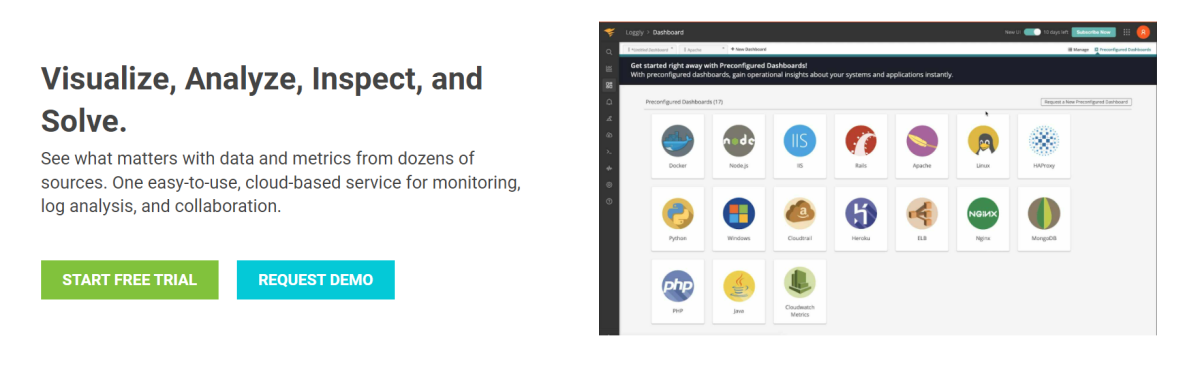

Loggly from SolarWinds

SolarWinds Loggly examines your web server’s access and error logs, as well as the site’s weekly metrics. You can view your log data at any point in time, and it has features that make searching through logs simple.

A powerful log file analysis tool like SolarWinds Loggly is needed to efficiently mine your web server’s log files for information about the success or failure of resource requests from clients.

Loggly can chart your least-viewed pages and compute average, minimum, and maximum page load times to help you optimize your website’s SEO.
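Statistics like these can also be computed from your own logs, with one caveat: the standard combined log format does not record timing. You would need to add it yourself, for example with Apache’s `%D` (microseconds) or Nginx’s `$request_time` (seconds), and convert it to milliseconds. The sketch assumes a hypothetical `response_ms` field already exists on each parsed record. Server response time is also not the same as full page load time in a browser.

```python
from collections import defaultdict
from statistics import mean


def timing_stats(records):
    """Request count plus average, minimum and maximum response time (ms) per URL."""
    times = defaultdict(list)
    for r in records:
        times[r["url"]].append(r["response_ms"])
    return {
        url: {"requests": len(t), "avg": mean(t), "min": min(t), "max": max(t)}
        for url, t in times.items()
    }


def least_requested(stats, n=3):
    """The n URLs with the fewest requests in the log window."""
    return sorted(stats, key=lambda url: stats[url]["requests"])[:n]


# Hypothetical records; "response_ms" must be added to the log format by you
records = [
    {"url": "/", "response_ms": 120},
    {"url": "/", "response_ms": 80},
    {"url": "/", "response_ms": 100},
    {"url": "/shoes", "response_ms": 300},
    {"url": "/shoes", "response_ms": 500},
    {"url": "/faq", "response_ms": 90},
]
stats = timing_stats(records)
print(stats["/shoes"])
print(least_requested(stats, n=1))
```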

Google Search Console Crawl Stats

Google Search Console makes things easier by providing a helpful overview of how Googlebot crawls your site. The report is simple to use, and your crawl stats are divided into three categories:

- Kilobytes downloaded per day: This shows how many kilobytes Googlebot downloads while visiting the website. High averages in the graph can mean one of two things: the site is crawled more often, or the bot is taking a long time to crawl the site because its pages aren’t lightweight.

- Pages crawled per day: This tells you the number of pages Googlebot crawls each day and whether crawl activity is low, high, or average. A low crawl rate suggests that Googlebot isn’t crawling the website properly.

- Time spent downloading a page (in milliseconds): This indicates how long Googlebot takes to complete HTTP requests while crawling the website. The less time Googlebot spends making requests and downloading pages, the better, as indexing will be faster.
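Similar metrics can be approximated from your own logs for any date range. This sketch assumes Googlebot-only records (already filtered and verified) with a parsed `time` datetime, a `size` in bytes, and a hypothetical `response_ms` field, which the default combined log format does not include. It counts requests rather than unique pages, so the figures will not match Search Console exactly.

```python
from collections import defaultdict
from datetime import datetime, timezone


def daily_crawl_stats(records):
    """Per day: number of requests, kilobytes downloaded, and average response time in ms."""
    days = defaultdict(lambda: {"requests": 0, "bytes": 0, "ms": 0})
    for r in records:
        day = days[r["time"].date()]
        day["requests"] += 1
        day["bytes"] += r["size"]
        day["ms"] += r["response_ms"]
    return {
        d: {
            "requests": v["requests"],
            "kilobytes": v["bytes"] / 1024,
            "avg_ms": v["ms"] / v["requests"],
        }
        for d, v in sorted(days.items())
    }


# Hypothetical Googlebot-only records (already filtered and verified)
records = [
    {"time": datetime(2023, 10, 10, 8, 0, tzinfo=timezone.utc), "size": 2048, "response_ms": 200},
    {"time": datetime(2023, 10, 10, 9, 30, tzinfo=timezone.utc), "size": 1024, "response_ms": 100},
    {"time": datetime(2023, 10, 11, 7, 15, tzinfo=timezone.utc), "size": 4096, "response_ms": 250},
]
for day, stats in daily_crawl_stats(records).items():
    print(day, stats)
```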

Conclusion

I hope you’ve gotten a lot out of this guide to log file analysis and the tools used to audit log files for SEO. Auditing log files can be very effective for improving the technical SEO of a website.

Google Search Console and SEMrush Log File Analyzer are two options if you want a free, basic analysis tool. Alternatively, check out Screaming Frog Log File Analyzer, JetOctopus, or Oncrawl Log Analyzer to better understand how search engine bots interact with your website; you can also combine premium and free log file analysis tools for SEO.

You may also want to check out some advanced website crawlers to improve SEO.